ArXiv Abstract Similarity Engine

ArXiv Abstract Similarity Engine is a final project created by three master's students in the fall semester of 2016 for the course 02805 Social graphs and interactions, run by Sune Lehmann at the Technical University of Denmark. The two main topics of the course (besides Python programming) are Natural Language Processing and Network Science. The project was therefore designed to leverage the techniques we learned in those topics.

“I would found an institution where any person can find instruction in any study.”

EZRA CORNELL, 1868

The arXiv (pronounced "archive", the X being the Greek letter χ/chi) is a repository of electronic preprints, known as e-prints, of scientific papers. As of December 2016, the repository holds 1,211,916 e-prints in the fields of mathematics, physics, astronomy, computer science, quantitative biology, statistics, and quantitative finance. The repository was created and is managed by the Cornell University Library and is, unlike other scientific repositories, available online to everyone without restrictions.

The data used in this project can be found in our project on GitHub (see the Links section). If you wish, you can also obtain your own data by querying the ArXiv API manually or by using the same harvester as we did (described in the Notebook).

Natural Language Processing (NLP) is a field of computer science dealing with computational linguistics.

The field has gained wide popularity in recent years, as the amount of human-produced text and data is rapidly increasing and artificial intelligence is being used in more and more contexts.

In this study, we focus on the abstracts of the scientific papers released on ArXiv.org. Each abstract is processed with the following steps in order to decide computationally which words are most important for the given paper.

Tokenization is the process of splitting a text into units, each unit being a word. In the process, special characters such as punctuation marks and exclamation marks, and even numbers, are removed, leaving just the actual words.
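The splitting step described above can be sketched with Python's standard `re` module. This is only a minimal illustration, not necessarily the tokenizer used in the project; the function name `tokenize` and the lowercasing of tokens are assumptions made here.

```python
import re

def tokenize(text):
    """Split text into lowercase word tokens, dropping punctuation and digits."""
    # [^\W\d_]+ matches runs of letters only, so punctuation, digits and
    # underscores act as separators and are discarded.
    return [token.lower() for token in re.findall(r"[^\W\d_]+", text)]

sample = "We analyze 2 bipartite unitaries; results hold!"
tokens = tokenize(sample)
print(tokens)
```

Note that with this pattern a hyphenated term such as "non-zero" is split into two tokens, which may or may not be what a given analysis wants.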

Stop words are words that are very common, often short, and contribute little to the meaning of the text they appear in. Some of the most common stop words are: the, to, at, is, which, and.
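Filtering stop words out of a token list could look roughly like this. The set `STOP_WORDS` below is a tiny illustrative list (the six words named above plus a few extra words added here as an assumption); a real stop-word list is much longer, and the helper name `remove_stop_words` is hypothetical.

```python
# Tiny illustrative stop-word set (assumption); real lists contain far more words.
STOP_WORDS = {"the", "to", "at", "is", "which", "and", "a", "of", "in", "we"}

def remove_stop_words(tokens, stop_words=STOP_WORDS):
    """Return the tokens that are not in the stop-word set, preserving order."""
    return [token for token in tokens if token not in stop_words]

filtered = remove_stop_words(
    ["we", "analyze", "the", "precision", "of", "gradient", "sensing"]
)
print(filtered)
```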

Lemmatization is the process of reducing a word to its root form (much like stemming, but more sophisticated), treating the words "messages", "message", and "messaging" as the same word.

The last step in our NLP process is the use of the numerical method TF-IDF to decide which words/topics are most important to the abstract. Instead of using the raw frequency of a word in a given abstract, the frequency is scaled down for words that occur very frequently across the corpus (all collected papers), because such words are empirically less informative than words that occur in only a small fraction of the corpus.
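To make the scaling concrete, here is a minimal sketch of one common TF-IDF variant: term frequency (count divided by document length) multiplied by `log(N / df)`, where `N` is the number of documents and `df` the number of documents containing the word. This exact formula is an assumption; the project may have used a library implementation, and those often apply smoothing or normalization that gives slightly different numbers.

```python
import math
from collections import Counter

def tf_idf(documents):
    """documents: list of token lists. Returns one {word: score} dict per document."""
    n_docs = len(documents)
    # Document frequency: in how many documents each word appears at least once.
    doc_freq = Counter(word for doc in documents for word in set(doc))
    scores = []
    for doc in documents:
        counts = Counter(doc)
        scores.append({
            word: (count / len(doc)) * math.log(n_docs / doc_freq[word])
            for word, count in counts.items()
        })
    return scores

# Toy corpus of three already-tokenized "abstracts" (made-up data).
docs = [
    ["graph", "network", "graph"],
    ["network", "quantum"],
    ["network", "galaxy", "disc"],
]
scores = tf_idf(docs)
print(scores[0])
```

In this toy corpus "network" appears in every document, so its score is zero everywhere, while "graph" appears in only one document and receives the highest score there.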

The study of networks has emerged in diverse disciplines as a means of analyzing complex relational data.

Graphs associated with networks can be either directed or undirected. A directed graph is a graph in which the edges have a direction associated with them, while in an undirected graph they do not.

Once the NLP steps were done, we started building a directed network graph, using the papers and the words of each paper as nodes.

To distinguish the two kinds of nodes (papers and words) from each other, we assumed that paper-nodes always have a non-zero out-degree and that word-nodes always have an out-degree of zero.
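The paper-to-word graph and the out-degree rule can be illustrated with a plain adjacency dictionary. This sketch deliberately avoids any graph library, whereas the project may well have used one; the names `build_paper_word_graph` and `is_paper`, and the paper identifiers, are hypothetical.

```python
def build_paper_word_graph(keywords_by_paper):
    """Return a directed adjacency dict with one edge paper -> word per keyword."""
    graph = {}
    for paper, words in keywords_by_paper.items():
        graph.setdefault(paper, set())
        for word in words:
            graph[paper].add(word)
            graph.setdefault(word, set())  # word-nodes get no outgoing edges
    return graph

def is_paper(graph, node):
    """A node counts as a paper if its out-degree is non-zero."""
    return len(graph[node]) > 0

# Made-up example data: two papers and their most important words.
graph = build_paper_word_graph({
    "paper_a": ["graph", "network"],
    "paper_b": ["network", "quantum"],
})
papers = sorted(node for node in graph if is_paper(graph, node))
words = sorted(node for node in graph if not is_paper(graph, node))
print(papers, words)
```

The rule only works if every paper keeps at least one word after the NLP steps; a paper with no words left would be indistinguishable from a word-node.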

This graph was the basis for a new undirected graph, in which every word-node is turned into edges between the papers that share that word. Since we couldn't have multiple edges between the same two nodes, we instead recorded the number of shared words as the weight of a single edge. The more words two papers have in common, the higher the weight.
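One way to sketch this conversion in plain Python is to group papers by word and then count, for every pair of papers, how many words they share. The function name `project_to_papers` and the sample data are assumptions for illustration only.

```python
from collections import Counter
from itertools import combinations

def project_to_papers(keywords_by_paper):
    """Return {(paper_a, paper_b): weight}, where weight = number of shared words."""
    papers_by_word = {}
    for paper, words in keywords_by_paper.items():
        for word in set(words):
            papers_by_word.setdefault(word, []).append(paper)
    weights = Counter()
    for papers in papers_by_word.values():
        # Every pair of papers sharing this word gets one more unit of weight.
        for a, b in combinations(sorted(papers), 2):
            weights[(a, b)] += 1
    return dict(weights)

# Made-up example data.
weights = project_to_papers({
    "p1": ["graph", "network", "community"],
    "p2": ["graph", "network", "quantum"],
    "p3": ["quantum", "galaxy"],
})
print(weights)
```

Each undirected edge is stored once, keyed by the sorted pair of paper identifiers, so `("p1", "p2")` and `("p2", "p1")` never appear as separate entries.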

Furthermore, we conducted community detection on the undirected graph, which enabled us to find related papers in different, and potentially unexpected, fields of study.

The data collected from ArXiv.org's API (Application Programming Interface) is loaded from its original XML files into the programming language Python.
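Loading such records could be sketched with the standard library's `xml.etree.ElementTree`. The XML layout below is a simplified, hypothetical stand-in (flat `<record>` elements without namespaces, with placeholder values); the real API responses are structured differently, so the tag names would need to be adapted.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified XML with placeholder values (not real API output).
SAMPLE_XML = """
<records>
  <record>
    <identifier>example:0001</identifier>
    <title>First example title</title>
    <abstract>First example abstract.</abstract>
    <date>2016-01-01</date>
    <topic>cs</topic>
  </record>
  <record>
    <identifier>example:0002</identifier>
    <title>Second example title</title>
    <abstract>Second example abstract.</abstract>
    <date>2016-01-02</date>
    <topic>math</topic>
  </record>
</records>
"""

FIELDS = ("identifier", "title", "abstract", "date", "topic")

def load_records(xml_text, fields=FIELDS):
    """Parse the XML text and return one dict per <record> element."""
    root = ET.fromstring(xml_text.strip())
    return [
        {field: (record.findtext(field) or "").strip() for field in fields}
        for record in root.iter("record")
    ]

records = load_records(SAMPLE_XML)
print(records[0]["identifier"], records[0]["topic"])
```

A list of dictionaries like `records` can be passed directly to `pandas.DataFrame(records)` to get a table with one column per field.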

To make the large amount of data (37,000 papers) easier to handle, we use the Python data analysis package pandas. We do not, however, need all of the provided metadata. A sample of five papers is shown below.

| identifier | title | abstract | date | topic |
|---|---|---|---|---|
| oai:arXiv.org:1505.04344 | On the maximum quartet distance between phylog... | A conjecture of Bandelt and Dress states tha... | 2016-02-04 | cs |
| oai:arXiv.org:1505.04346 | Limits to the precision of gradient sensing wi... | Gradient sensing requires at least two measu... | 2016-04-27 | physics:physics |
| oai:arXiv.org:1505.04350 | Differentiation and integration operators on w... | We show that some previous results concernin... | 2016-04-04 | math |
| oai:arXiv.org:1505.04352 | A Coding Theorem for Bipartite Unitaries in Di... | We analyze implementations of bipartite unit... | 2016-06-09 | physics:quant-ph |
| oai:arXiv.org:1505.04355 | Resonant Trapping in the Galactic Disc and Hal... | With the use of a detailed Milky Way nonaxis... | 2016-10-31 | physics:astro-ph |

After finishing the steps explained in the NLP section, we have the data shown below loaded into our program.

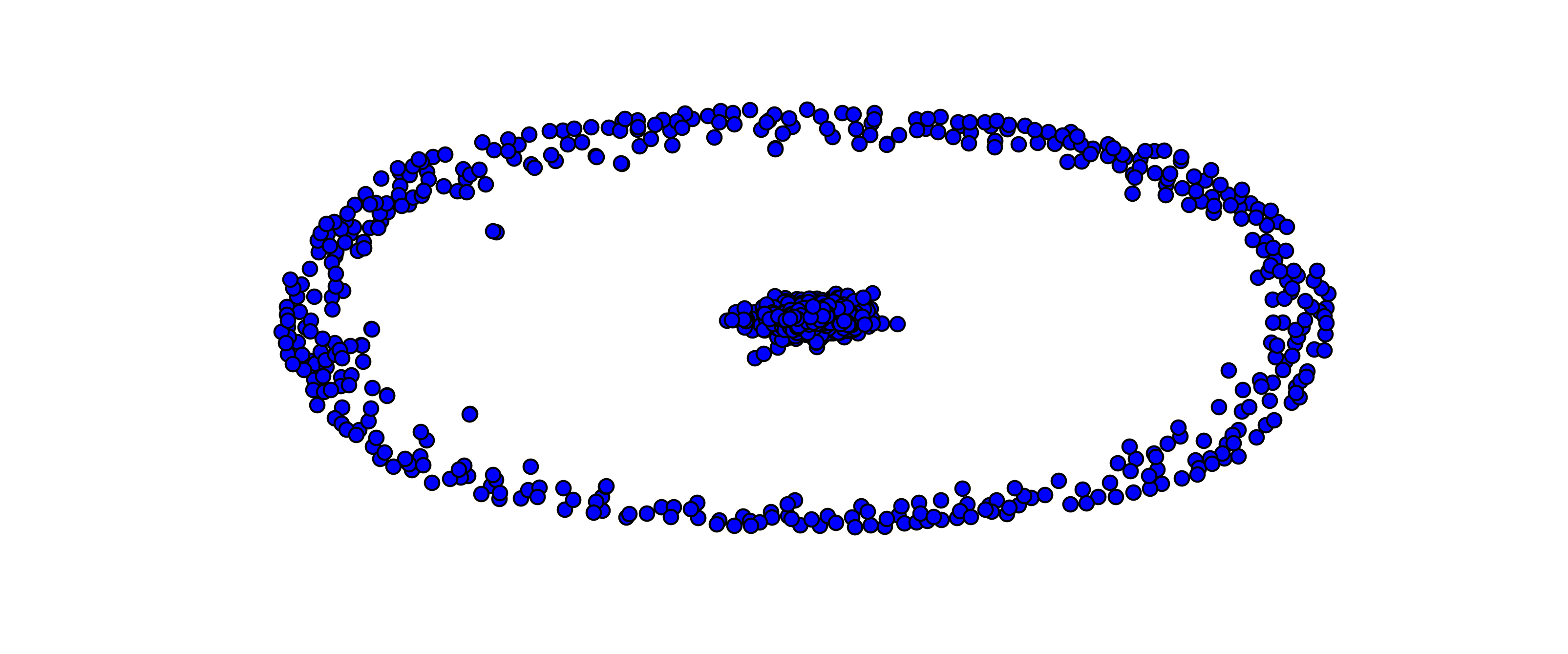

After finishing the steps explained in the Network section, we end up with a very large network graph. There are around one and a half million edges/links in the network, making it very heavy to work with.

As many of these relationships between papers have a low significance (a low edge weight), we decided to remove them to make future calculations more tractable.
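Pruning low-weight edges amounts to a simple filter over the weighted edge list. The sketch below assumes edges are stored as a `{(paper_a, paper_b): weight}` dictionary, which is an illustrative choice; the default threshold of three is the one used in this project (edges with weight less than three are dropped).

```python
def filter_edges(weights, min_weight=3):
    """Keep only the edges whose weight is at least min_weight."""
    return {edge: w for edge, w in weights.items() if w >= min_weight}

# Made-up example weights.
all_edges = {("p1", "p2"): 5, ("p1", "p3"): 1, ("p2", "p3"): 3, ("p3", "p4"): 2}
strong_edges = filter_edges(all_edges)
print(strong_edges)
```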

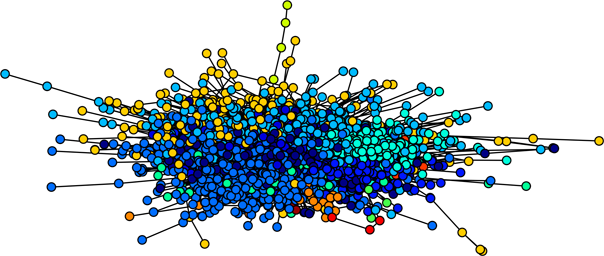

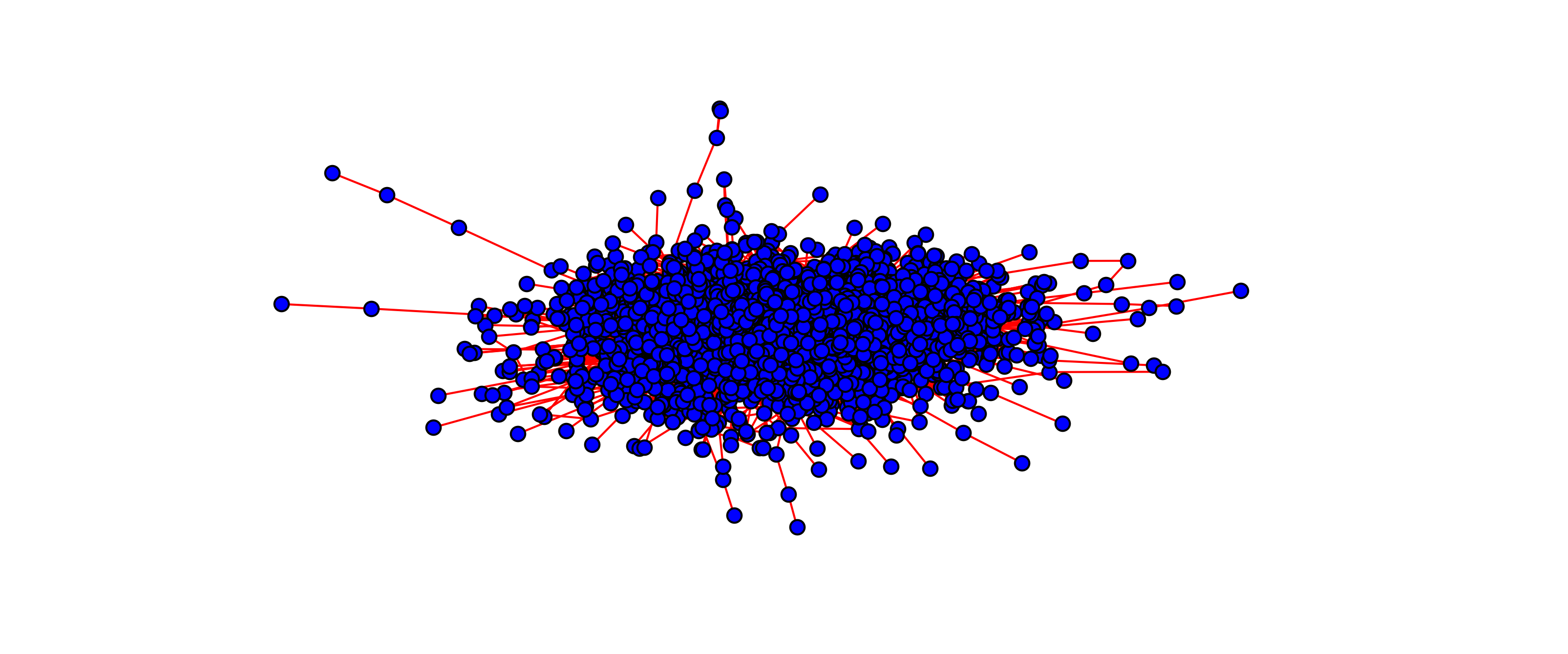

Below is the network graph visualization just after creating the network and filtering out the edges with a weight of less than three, because they are not very significant.

As the visualization clearly shows, there is a giant connected component, meaning that a lot of papers are all tightly connected to each other. This is the center.

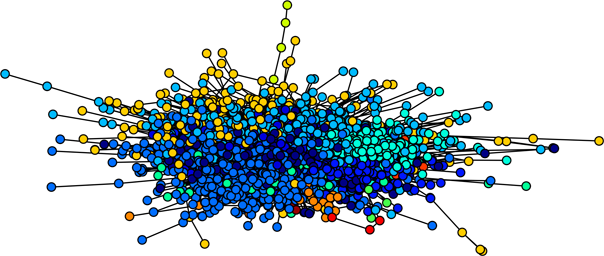
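The giant connected component mentioned above can be found with a breadth-first search over the undirected edges, keeping the largest component. This is a generic sketch with made-up edges and a hypothetical function name, not the project's own code.

```python
from collections import deque

def connected_components(edges):
    """Return a list of node sets, one per connected component."""
    adjacency = {}
    for a, b in edges:
        adjacency.setdefault(a, set()).add(b)
        adjacency.setdefault(b, set()).add(a)
    seen, components = set(), []
    for start in adjacency:
        if start in seen:
            continue
        seen.add(start)
        component, queue = {start}, deque([start])
        while queue:
            node = queue.popleft()
            for neighbor in adjacency[node]:
                if neighbor not in seen:
                    seen.add(neighbor)
                    component.add(neighbor)
                    queue.append(neighbor)
        components.append(component)
    return components

# Made-up edges: one component of three papers and one of two.
edges = [("p1", "p2"), ("p2", "p3"), ("p4", "p5")]
giant = max(connected_components(edges), key=len)
print(sorted(giant))
```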

If we 'zoom in' on the center of the above graph, we get the visualization below.

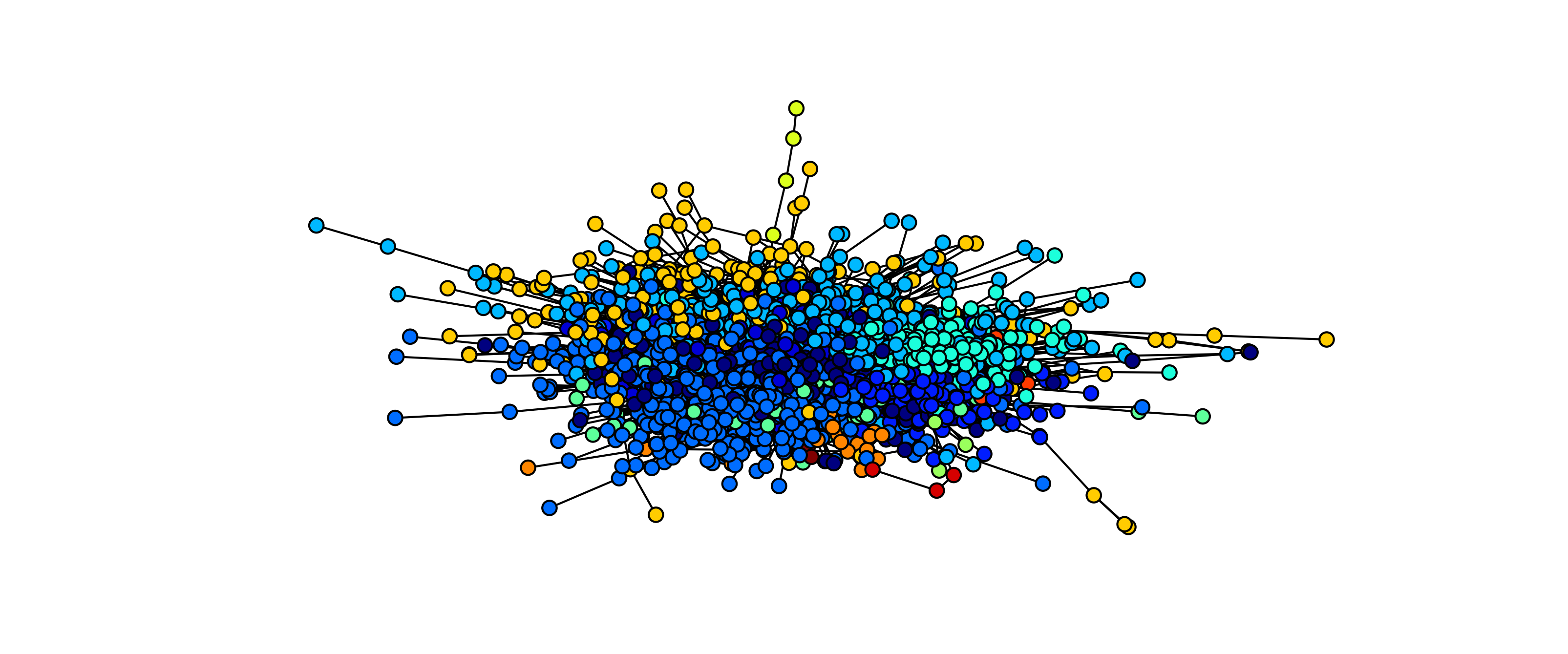

After running the Louvain algorithm on this giant component, we are able to detect and distinguish between the different communities.

The communities in the graph are depicted by different colors below.

In the sampled data, we managed to detect 12 communities among 5,000 papers.
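The Louvain algorithm searches for a partition that maximizes modularity. The sketch below does not implement Louvain itself; it only computes the modularity of a given partition of a weighted, undirected graph without self-loops, which is the score such an algorithm tries to increase. The function name and the toy graph are assumptions.

```python
def modularity(weights, community_of):
    """weights: {(a, b): w} undirected edges; community_of: {node: community id}."""
    total = sum(weights.values())  # total edge weight m
    internal = {}  # summed weight of edges inside each community
    degree = {}    # summed weighted degree of the nodes in each community
    for (a, b), w in weights.items():
        ca, cb = community_of[a], community_of[b]
        degree[ca] = degree.get(ca, 0) + w
        degree[cb] = degree.get(cb, 0) + w
        if ca == cb:
            internal[ca] = internal.get(ca, 0) + w
    # Q = sum over communities of (internal / m) - (degree / 2m)^2
    return sum(
        internal.get(c, 0) / total - (degree[c] / (2 * total)) ** 2
        for c in degree
    )

# Toy graph: two triangles joined by a single bridge edge, all weights 1.
toy_edges = {
    ("a", "b"): 1, ("b", "c"): 1, ("a", "c"): 1,
    ("d", "e"): 1, ("e", "f"): 1, ("d", "f"): 1,
    ("c", "d"): 1,
}
split = {"a": 0, "b": 0, "c": 0, "d": 1, "e": 1, "f": 1}
merged = {node: 0 for node in split}
print(modularity(toy_edges, split), modularity(toy_edges, merged))
```

Splitting the toy graph into its two triangles gives a modularity of 5/14, while putting every node into one community gives zero, so the split is the better partition by this measure.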

Having detected different communities, we thought it might be interesting to see which words were the most common in each.
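Counting the most common words per community can be sketched with `collections.Counter`. The inputs (a mapping from paper to its words and a mapping from paper to community) and the function name are illustrative assumptions.

```python
from collections import Counter

def top_words_by_community(keywords_by_paper, community_of, n=3):
    """Return {community: [most common words]} across the papers in each community."""
    counters = {}
    for paper, words in keywords_by_paper.items():
        counters.setdefault(community_of[paper], Counter()).update(words)
    return {
        community: [word for word, _ in counter.most_common(n)]
        for community, counter in counters.items()
    }

# Made-up example data.
keywords = {
    "p1": ["graph", "network"],
    "p2": ["graph", "community"],
    "p3": ["galaxy", "disc"],
    "p4": ["galaxy", "halo"],
}
communities = {"p1": 0, "p2": 0, "p3": 1, "p4": 1}
top = top_words_by_community(keywords, communities, n=1)
print(top)
```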

Each community revolves around many different papers from many different sciences (except for the last two), which supports our initial idea of being able to find interesting papers in different fields of study.

Only the top two (or one) fields of study are shown below, but the analysis notebook contains, for each community, the full list of fields and their respective number of papers.

We are thrilled that we exceeded our expectations for the project. We managed not only to fulfill our basic goal of creating the network of scientific papers, but also to analyze it further and make it a useful engine for a future product. It resembles the kind of engine Netflix uses to suggest related movies to users based on their preferences, at the stage just before any machine learning algorithm is applied.

We created the fundamentals of an engine that is fed with new papers every 20 seconds, stores the data in a neat format, feeds the data into the graph, defines communities of papers based on betweenness centrality and modularity thresholds, and feeds the communities into a model that suggests the most related next paper to a user based on the papers they have already read.
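As a rough illustration of the final suggestion step, the sketch below scores every unread paper by the total edge weight connecting it to the papers a user has already read and returns the highest-scoring one. This is a deliberately simplified stand-in that uses edge weights only and ignores communities; it is not the project's actual model, and the function name and data are hypothetical.

```python
def recommend_next(weights, read_papers):
    """Return the unread paper most strongly connected to the papers already read."""
    read = set(read_papers)
    scores = {}
    for (a, b), w in weights.items():
        if a in read and b not in read:
            scores[b] = scores.get(b, 0) + w
        elif b in read and a not in read:
            scores[a] = scores.get(a, 0) + w
    if not scores:
        return None  # nothing connected to the user's reading history
    return max(scores, key=scores.get)

# Made-up similarity weights between papers.
similarity = {("p1", "p2"): 3, ("p1", "p3"): 5, ("p2", "p3"): 1, ("p3", "p4"): 4}
suggestion = recommend_next(similarity, ["p1"])
print(suggestion)
```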