This is an example of how we can do simple data parsing using the Unix terminal. We will use tools like chmod, find, grep, sed, apt-get, nano, cut, tr, sort, uniq, comm, head, tail, less, cat, ssh, wc, echo, man, awk and more.

Tip 1 - How to write a command that finds the 10 most popular words in a file

Let’s suppose that we have a text file named draft.txt with the following content.

HANGZHOU, China — The image of a 5-year-old Syrian boy, dazed and bloodied after being rescued from an airstrike on rebel-held Aleppo, reverberated around the world last month, a harrowing reminder that five years after civil war broke out there, Syria remains a charnel house.

But the reaction was more muted in Washington, where Syria has become a distant disaster rather than an urgent crisis. President Obama’s policy toward Syria has barely budged in the last year and shows no sign of change for the remainder of his term. The White House has faced little pressure over the issue, in part because Syria is getting scant attention on the campaign trail from either Donald J. Trump or Hillary Clinton.

That frustrates many analysts because they believe that a shift in policy will come only when Mr. Obama has left office. “Given the tone of this campaign, I doubt the electorate will be presented with realistic and intelligible options, with respect to Syria,” said Frederic C. Hof, a former adviser on Syria in the administration.

The lack of substantive political debate about Syria is all the more striking given that the Obama administration is engaged in an increasingly desperate effort to broker a deal with Russia for a cease-fire that would halt the rain of bombs on Aleppo.

Those negotiations moved on Sunday to China, where Secretary of State John Kerry met for two hours with the Russian foreign minister, Sergey V. Lavrov, at a Group of 20 meeting. At one point, the State Department was confident enough to schedule a news conference, at which the two were supposed to announce a deal.

But Mr. Kerry turned up alone, acknowledging that “a couple of tough issues” were still dividing them.

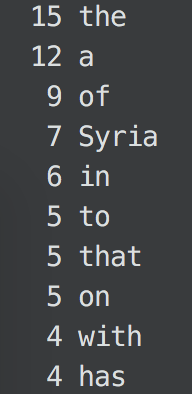

I will first show the command and its output, and then describe what each tool in the pipeline does.

tr -c '[:alnum:]' '[\n*]' < draft.txt | sort | uniq -c | sort -nr | head -11 | tail -10

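- tr -c '[:alnum:]' '[\n*]' replaces every character that is not alphanumeric (-c takes the complement of the set [:alnum:]) with a newline. The result is a list of the words in draft.txt, one per line, where a word is taken to be a maximal string of alphanumeric characters.
- The output is then piped to sort, which orders the words alphabetically so that identical words end up on adjacent lines.
- uniq -c collapses each run of identical adjacent lines into a single line, prefixed by the number of times it occurred and a space.
- sort -nr sorts these lines numerically (-n) in reverse, i.e. descending, order (-r), so the most frequent words come first.
- Finally, head -11 keeps the eleven most frequent entries and tail -10 drops the first of them. That first entry is the empty line: every run of two or more consecutive non-alphanumeric characters (such as ", " or a blank line) produces empty lines, and in this document they outnumber every real word.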
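The following variant of the same pipeline is an alternative, not the command used above. tr -cs squeezes each run of non-alphanumeric characters into a single newline, so empty lines almost never appear. Case is folded so that "The" and "the" are counted together. Any remaining empty line is filtered out, which removes the need for the head -11 | tail -10 trick. The function name top_words is hypothetical.

```shell
# top_words FILE [N]: print the N (default 10) most frequent words in FILE.
# Hypothetical helper; assumes a "word" is a maximal run of alphanumeric
# characters and that upper/lower case should be counted together.
top_words() {
    n="${2:-10}"
    # -c: act on every character NOT in [:alnum:]
    # -s: squeeze each run of those characters into a single newline
    tr -cs '[:alnum:]' '\n' < "$1" |
        tr '[:upper:]' '[:lower:]' |   # fold case: "The" and "the" merge
        grep -v '^$' |                 # drop a leading empty line, if any
        sort | uniq -c | sort -nr | head -n "$n"
}
```

For example, top_words draft.txt prints the ten most frequent words with their counts. Because case is folded, the counts can differ from those produced by the case-sensitive command above.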

Tip 2 - How to write a command that removes all rows from a table where the price is more than $10,000

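Let’s again suppose that we have a text file named cars.txt with the following content. In the file itself the five columns are separated by spaces, which is what the grep and awk commands below rely on. The fifth column is the price.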

| Column 1 | Column 2 | Column 3 | Column 4 | Column 5 (price) |
|---|---|---|---|---|
| plym | fury | 77 | 73 | 2500 |
| chevy | nova | 79 | 60 | 3000 |
| ford | mustang | 65 | 45 | 17000 |
| volvo | gl | 78 | 102 | 9850 |
| ford | ltd | 83 | 15 | 10500 |
| Chevy | nova | 80 | 50 | 3500 |
| fiat | 600 | 65 | 115 | 450 |
| honda | accord | 81 | 30 | 6000 |
| ford | thundbd | 84 | 10 | 17000 |
| toyota | tercel | 82 | 180 | 750 |
| chevy | impala | 65 | 85 | 1550 |
| ford | bronco | 83 | 25 | 9525 |

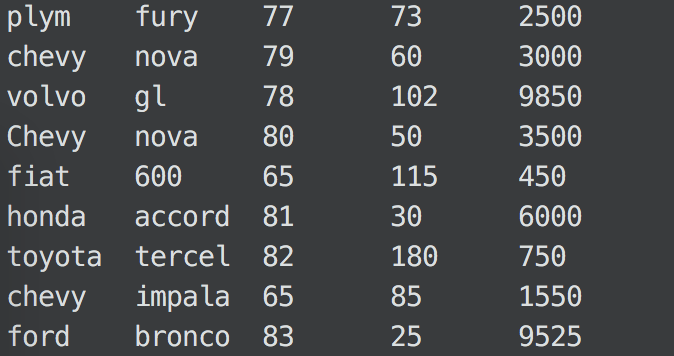

Again, I will first show the command and its output, and then explain how it works.

grep -vE '.* [0]*[1-9][0-9]{4,}$' cars.txt

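Comparing the output with the initial table, we can see that the rows whose fifth column is greater than 10000 have been removed. Strictly speaking, the pattern matches any price with at least five significant digits, i.e. 10000 or more. A row priced at exactly 10000 would therefore be removed as well, although no row in this table has that price.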
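To check exactly which prices the pattern removes, the sketch below wraps the same grep -vE command in a function and feeds it a few made-up boundary rows. The name drop_expensive and the sample rows are hypothetical and not part of cars.txt. Only the rows priced 9999 and 09999 survive. 10000 is removed along with 10001, and the [0]* part lets a zero-padded price be compared by its significant digits.

```shell
# drop_expensive: the grep -vE filter from above, wrapped in a function.
# It deletes every line that ends in a space followed by an (optionally
# zero-padded) integer with at least five significant digits, i.e. >= 10000.
drop_expensive() {
    grep -vE '.* [0]*[1-9][0-9]{4,}$' "$@"
}

# Hypothetical boundary rows (not taken from cars.txt).
printf '%s\n' \
    'make model 80 10 9999' \
    'make model 80 10 10000' \
    'make model 80 10 10001' \
    'make model 80 10 09999' |
    drop_expensive
```

On the real data it is called exactly like the original command: drop_expensive cars.txt.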

We can get exactly the same output with awk, which is a complete programming language designed for processing text line by line and field by field.

awk '{ if ($5 <= 10000) print }' cars.txt

Here the program applies a conditional check to the fifth column, $5, and prints the whole line only when the number is smaller than or equal to 10000. Lines with a price above 10000 are not printed, so they never reach the standard output.
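If the threshold changes often, it can be passed in from the shell rather than hard-coded. The sketch below is one way to do this. It assumes the same space-separated, five-column layout, and the function name price_at_most is hypothetical. awk -v assigns a shell value to an awk variable before the program runs. Adding 0 to both sides forces a numeric comparison.

```shell
# price_at_most FILE MAX: print the rows of FILE whose fifth field (price)
# is less than or equal to MAX. Hypothetical helper for illustration.
price_at_most() {
    # -v copies the shell value "$2" into the awk variable max.
    # A pattern with no action prints every line for which it is true.
    awk -v max="$2" '$5 + 0 <= max + 0' "$1"
}
```

With this helper, price_at_most cars.txt 10000 prints the same rows as the awk command above, and price_at_most cars.txt 5000 keeps only the cheaper cars.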

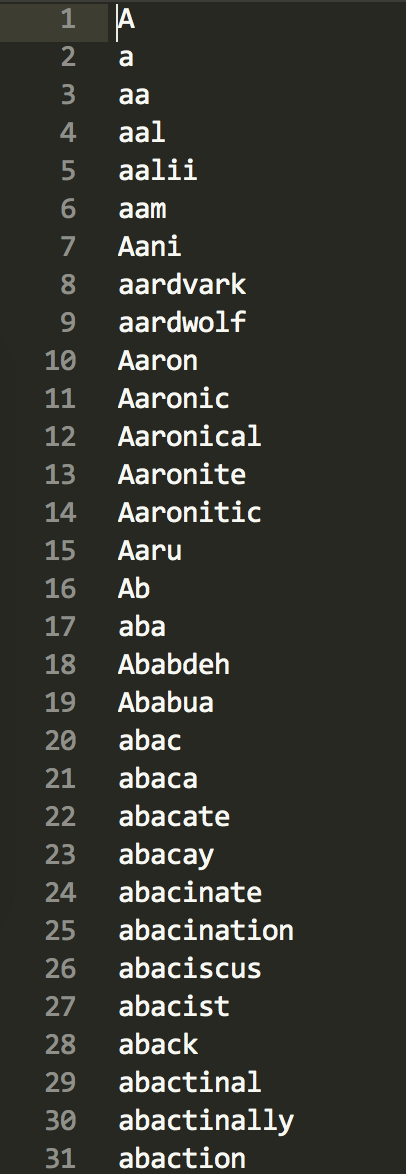

Tip 3 - Create a spell checker

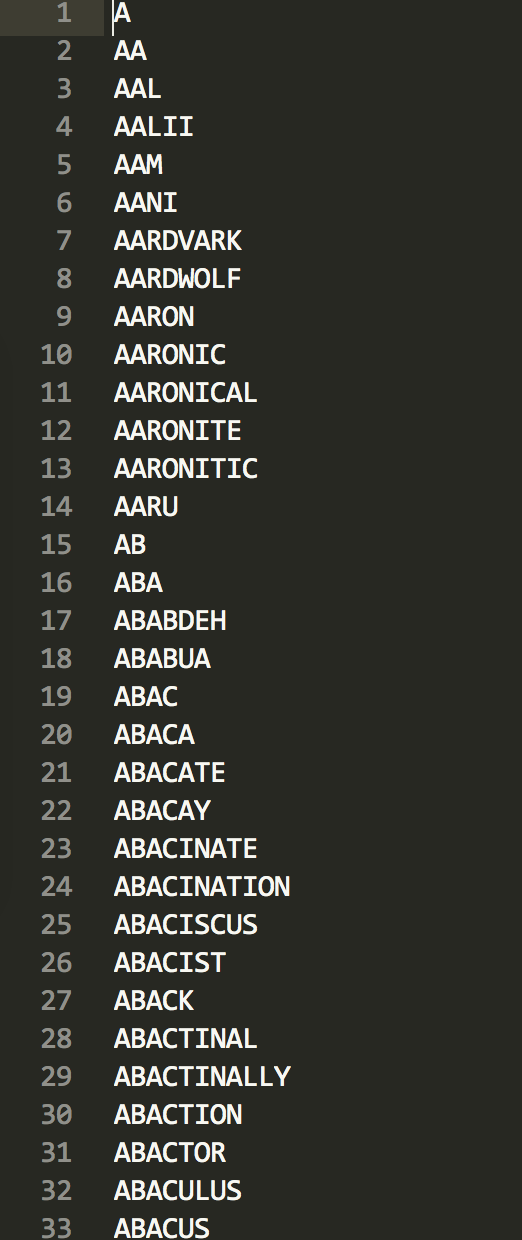

Let’s assume that we have a dictionary of correctly spelled words like the one below. It consists of about 2 million lines, each containing a word (or a letter).

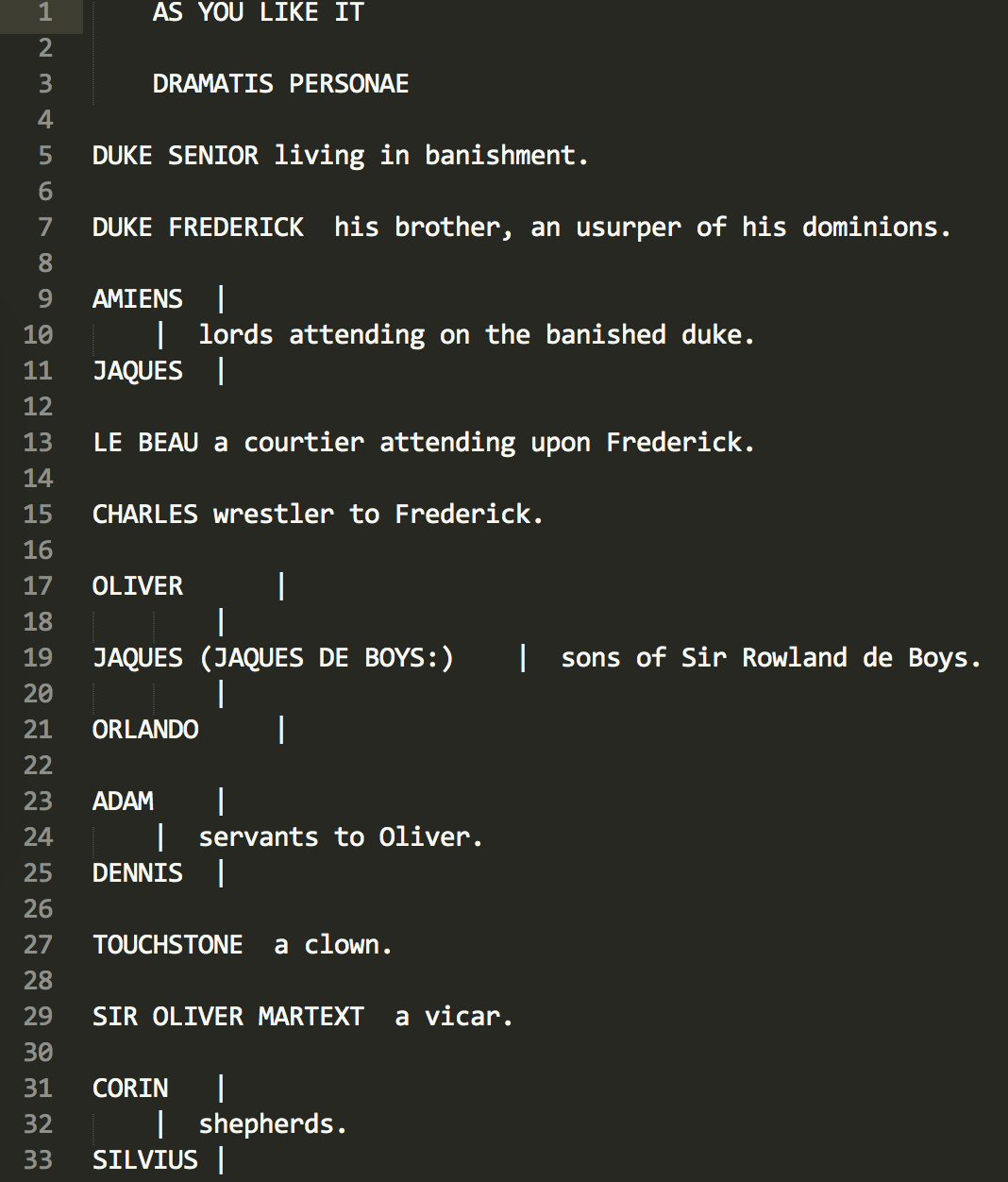

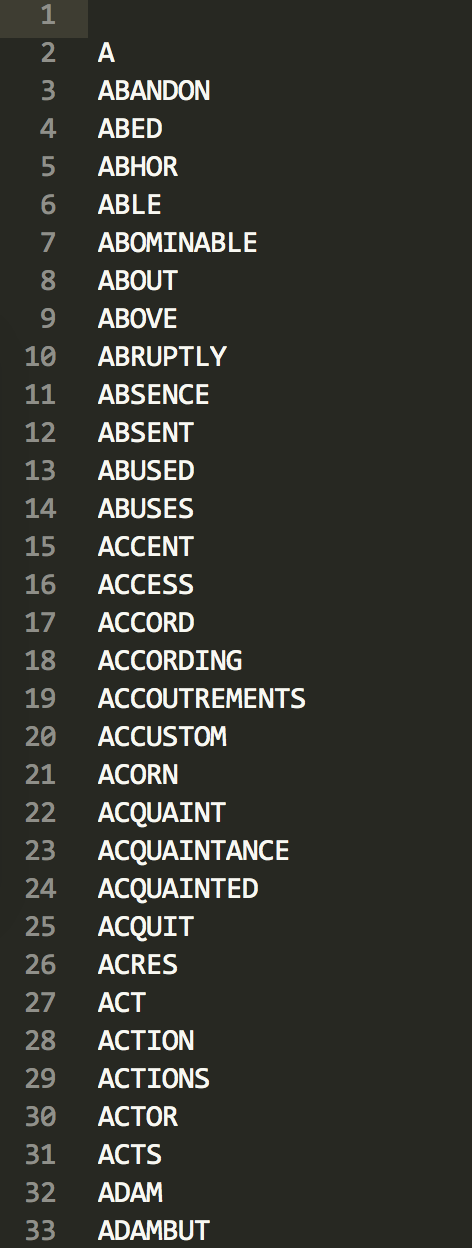

We will use this dictionary to count how many misspelled words a given text contains. For this exercise I have chosen an excerpt from Shakespeare. Its first lines are shown below.

To check the spelling of each word, we first need both files in the same format and style. So let’s convert every word of the dictionary to upper case, sort the result alphabetically and save it in a new file named orderDict.

tr 'a-z' 'A-Z' < dict | sort -u > orderDict

Next, we tokenize shakespear.txt into the same format: one upper-case word per line, sorted, with each word listed once.

grep -oi '[a-z][a-z]*' shakespear.txt | tr 'a-z' 'A-Z' | sort -u > words.txt
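To see what the tokenizing step produces, it can be wrapped in a function and tried on a short sample. The function name to_word_list and the sample sentence are hypothetical. The pattern [a-z][a-z]* requires at least one letter, so it can never match an empty string.

```shell
# to_word_list: read text on stdin and print each distinct word once,
# upper-cased and sorted. Hypothetical helper mirroring the pipeline above.
to_word_list() {
    grep -oi '[a-z][a-z]*' |   # each run of letters on its own line
        tr 'a-z' 'A-Z' |       # normalize case to match orderDict
        sort -u                # sort and keep one copy of each word
}

printf 'To be, or not to be: that is the question.\n' | to_word_list
```

This prints BE, IS, NOT, OR, QUESTION, THAT, THE and TO, one per line. Punctuation is dropped, and the repeated "to" and "be" appear only once.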

Now words.txt and orderDict look like this.

Finally, we can compare the two files with the following command.

comm -23 words.txt orderDict | wc -l

The output is a single number: the count of distinct words in the text that do not appear in the dictionary, i.e. the candidate misspellings. For this particular document it is 721.

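- The -u option of sort outputs only one line from each run of equal lines, i.e. it removes duplicates after sorting.
- The -o option of grep prints only the matched part of each line, each match on its own line, while the -i option makes the match case-insensitive.
- The -23 option of comm suppresses column 2 (lines found only in the second file) and column 3 (lines found in both files). Only the lines unique to the first file, words.txt, are printed. comm expects both inputs to be sorted, which is why both files were produced with sort.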
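Putting the steps together, a minimal spell checker could look like the sketch below. It illustrates the approach rather than reproducing the exact commands above. The function name spellcheck, the temporary files, and setting LC_ALL=C are choices made for this sketch. LC_ALL=C gives sort and comm one fixed collation order to agree on. The function prints the words of the text that are missing from the dictionary.

```shell
# spellcheck DICT TEXT: print each distinct word of TEXT, upper-cased,
# that does not appear in DICT. Hypothetical helper for illustration.
# The body runs in a subshell ( ... ) so its variables stay local.
spellcheck() (
    dict_sorted=$(mktemp)
    text_words=$(mktemp)
    # Dictionary: upper-case every entry, sort in a fixed collation
    # order (LC_ALL=C) and drop duplicate lines.
    tr 'a-z' 'A-Z' < "$1" | LC_ALL=C sort -u > "$dict_sorted"
    # Text: extract runs of letters, upper-case them, sort, deduplicate.
    grep -oi '[a-z][a-z]*' "$2" | tr 'a-z' 'A-Z' | LC_ALL=C sort -u > "$text_words"
    # -23 hides column 2 (only in the dictionary) and column 3 (in both),
    # leaving the words that occur only in the text.
    LC_ALL=C comm -23 "$text_words" "$dict_sorted"
    rm -f "$dict_sorted" "$text_words"
)
```

Running spellcheck dict shakespear.txt | wc -l gives a count, as the comm -23 words.txt orderDict | wc -l command does. Without wc -l, it lists the unknown words themselves.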